Avro Schema Editor & Design Tool

Avro Schema Design

Apache Avro is an open source project providing a data serialization framework and data exchange services often used in the context of Apache Kafka and Apache Hadoop, to facilitate the exchange of big data between applications. It is also used for efficient storage in Apache Hive or Oracle for NoSQL, or as a data source in Apache Spark or Apache NiFi.

It is a row-oriented object container storage format and is language-independent. Avro uses JSON to define the schema and data types, allowing for convenient schema evolution. The data storage is compact and efficient, with both the data itself and the data definition being stored in one message or file, meaning that a serialized item can be read without knowing the schema ahead of time.

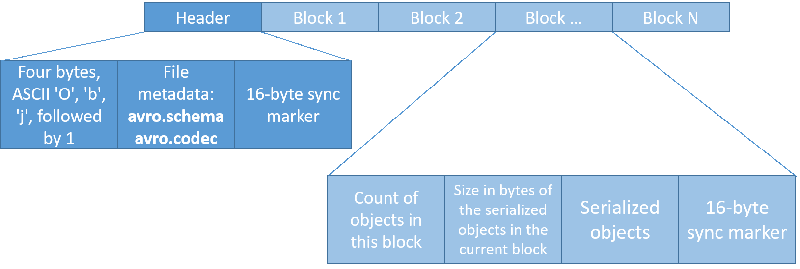

An Avro container file consists of a header and one or multiple file storage blocks. The header contains the file metadata including the storage blocks schema definition. Avro follows its own standards for defining schemas, expressed in JSON.
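To make the header layout concrete, here is a minimal Python sketch (standard library only) that writes and reads back a container file header: the 4-byte magic, a metadata map carrying `avro.schema` and `avro.codec`, and a 16-byte sync marker. The function names are hypothetical, data blocks are omitted, and a real application would normally rely on an Avro library rather than hand-written parsing.

```python
import io
import json
import os

MAGIC = b"Obj\x01"  # 'O', 'b', 'j' followed by the format version byte


def write_long(out, n):
    """Write an Avro long: zigzag encoding, then 7 bits per byte."""
    n = (n << 1) ^ (n >> 63)
    while n & ~0x7F:
        out.write(bytes([(n & 0x7F) | 0x80]))
        n >>= 7
    out.write(bytes([n]))


def read_long(inp):
    """Read an Avro long written by write_long."""
    shift, result = 0, 0
    while True:
        byte = inp.read(1)[0]
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return (result >> 1) ^ -(result & 1)
        shift += 7


def write_header(out, schema, codec="null"):
    """Write magic, metadata map and a random sync marker; return the marker."""
    meta = {"avro.schema": json.dumps(schema).encode("utf-8"),
            "avro.codec": codec.encode("utf-8")}
    out.write(MAGIC)
    write_long(out, len(meta))          # one map block holding every entry
    for key, value in meta.items():
        raw_key = key.encode("utf-8")
        write_long(out, len(raw_key))
        out.write(raw_key)
        write_long(out, len(value))
        out.write(value)
    write_long(out, 0)                  # a zero count ends the map
    sync = os.urandom(16)
    out.write(sync)
    return sync


def read_header(inp):
    """Return (schema, metadata, sync marker) from the start of a file."""
    if inp.read(4) != MAGIC:
        raise ValueError("not an Avro container file")
    meta = {}
    while True:
        count = read_long(inp)
        if count == 0:
            break
        if count < 0:                   # negative count: a byte size follows
            read_long(inp)
            count = -count
        for _ in range(count):
            key = inp.read(read_long(inp)).decode("utf-8")
            meta[key] = inp.read(read_long(inp))
    return json.loads(meta["avro.schema"]), meta, inp.read(16)


if __name__ == "__main__":
    buffer = io.BytesIO()
    write_header(buffer, {"type": "record", "name": "User",
                          "fields": [{"name": "id", "type": "long"}]})
    buffer.seek(0)
    print(read_header(buffer)[0])
```

Because the schema travels in the header, a reader can decode the blocks that follow without any out-of-band information.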

Avro schemas add robustness to streaming architectures like Kafka, where producers and an unknown number of consumers evolve on different timelines. Schemas support evolution of the metadata and make the data self-documenting, which makes it more future-proof.

Producers and consumers are further decoupled by defining constraints for the way schemas are allowed to evolve over time. These evolution rules can be published in a schema registry, as provided by Confluent, Hortonworks, or NiFi. With a schema registry, you may also increase memory and network efficiency by sending the ID of a schema stored in the registry instead of repeating the schema itself with each message.
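As an illustration of sending a schema ID instead of the schema, the sketch below frames a message the way the Confluent wire format does: one magic byte (0), a 4-byte big-endian schema ID, then the Avro-encoded payload. The function names are hypothetical, and other registries may use a different framing.

```python
import struct

MAGIC_BYTE = 0  # framing version used by the Confluent wire format


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix an Avro-encoded payload with the magic byte and schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> tuple:
    """Split a framed message into (schema_id, avro_payload)."""
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError("unknown framing: magic byte is not 0")
    return schema_id, message[5:]


if __name__ == "__main__":
    message = frame(42, b"T\x06Ada")
    print(len(message), unframe(message))
```

The overhead is a fixed 5 bytes per message, regardless of how large the schema is; the consumer uses the ID to fetch the schema from the registry (and would typically cache it).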

Try Hackolade Studio for FREE

There's no risk, no obligation, and no credit card required!

Just access the application in your browser.

No credit card. No registration. No download. Runs in browser. No cookies. Local storage of models. Security first.

Avro Schema Evolution

As applications evolve, it is typical that the corresponding schema requirements change, with additions, deletions, or modifications to schema structure and fields. An important aspect of data management is to maximize schema compatibility and ensure that consumers are able to seamlessly read old and new data.

Backward compatibility means that data produced with an old version of the schema can be read with a newer version of the schema. Forward compatibility means that data written with a new version of the schema can be read with an older version of the schema. Full compatibility means that schemas are both backward and forward compatible: old data can be read with a newer version of the schema, and new data can be read with an older version of the schema.

When managing Avro schema, you may want to keep the following guidelines and best practices in mind:

- you may safely add, change, or remove non-mandatory field attributes, such as “doc” or “order”;

- you may safely change the sorting order of field attributes;

- you may safely add or remove field aliases;

- you may safely add a field, as long as it has a default value;

- you may safely add or change a default value for an existing field;

- do not remove a required field, unless it had a default value previously, or you will lose forward compatibility: consumers still on the old schema expect the field and have no default to fall back on;

- do not change a field from data type long to int, or values outside the 32-bit int range can no longer be read;

- do not change a required field to optional, or you will lose forward compatibility;

- you may rename optional fields with default values, but do not rename required fields;

- do not change field data types, or you will lose both forward and backward compatibility.
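Some of these guidelines can be checked mechanically. The following is a deliberately simplified sketch, not Avro's full schema resolution: it only looks at top-level record fields, treats any type difference as a problem (ignoring the type promotions Avro defines), and uses hypothetical function and schema names.

```python
def read_problems(reader: dict, writer: dict) -> list:
    """List reasons why `reader` cannot decode records written with `writer`."""
    written = {field["name"]: field for field in writer["fields"]}
    problems = []
    for field in reader["fields"]:
        # A reader field matches a writer field by name or by one of its aliases.
        candidates = [field["name"], *field.get("aliases", [])]
        source = next((written[n] for n in candidates if n in written), None)
        if source is None:
            if "default" not in field:
                problems.append(f"{field['name']}: not written and no default")
        elif source["type"] != field["type"]:
            problems.append(f"{field['name']}: type changed")
    return problems


def is_backward_compatible(new: dict, old: dict) -> bool:
    """Old data can be read with the new schema."""
    return not read_problems(reader=new, writer=old)


def is_forward_compatible(new: dict, old: dict) -> bool:
    """New data can be read with the old schema."""
    return not read_problems(reader=old, writer=new)


def record(*fields):
    return {"type": "record", "name": "User", "fields": list(fields)}


ID = {"name": "id", "type": "long"}
NAME = {"name": "name", "type": "string"}

V1 = record(ID, NAME)
# Added field with a default value.
V2 = record(ID, NAME, {"name": "email", "type": "string", "default": ""})
# Added field without a default value.
V3 = record(ID, NAME, {"name": "email", "type": "string"})
# Required field renamed, with an alias pointing at the old name.
V4 = record(ID, {"name": "full_name", "type": "string", "aliases": ["name"]})

if __name__ == "__main__":
    for label, new in (("V2", V2), ("V3", V3), ("V4", V4)):
        print(label, is_backward_compatible(new, V1), is_forward_compatible(new, V1))
```

In this sketch, adding a field with a default keeps both directions working, adding one without a default breaks backward compatibility, and renaming a required field breaks forward compatibility even when an alias is provided.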

Avro Schema Design Tool

Hackolade has pioneered the field of data modeling for NoSQL databases and REST APIs, introducing graphical software to perform the schema design of hierarchical and graph structures.

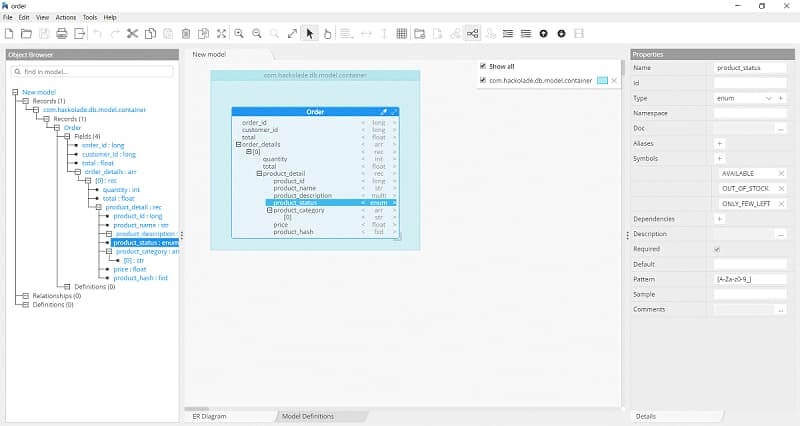

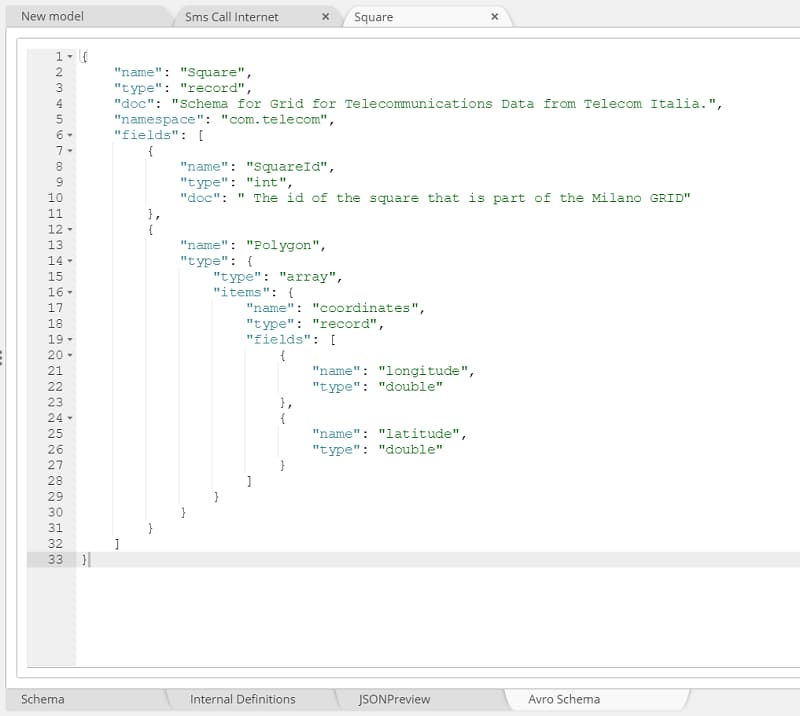

Hackolade is an Avro schema viewer and an Avro schema editor that dynamically forward-engineers Avro schema as the user visually builds an Avro data model. It can also reverse-engineer existing Avro files and Avro schema files so a data modeler or information architect can enrich the model with descriptions, metadata, and constraints.

Once the Avro schema has been retrieved, Hackolade persists the state of the data model and generates HTML documentation of the Avro schema to serve as a platform for a productive dialog between analysts, designers, architects, and developers. The visual Avro schema design tool supports several use cases to help enterprises manage their data.

Components of an Avro schema model

An Avro schema can be viewed as a language-agnostic contract for systems to interoperate. There are four attributes for a given Avro schema:

- Type: specifies the data type of the JSON record, whether it is a complex type or a primitive value. At the top level of an Avro schema, it is mandatory to have a “record” type.

- Name: the name of the Avro schema being defined

- Namespace: a high-level logical indicator of the Avro schema

- Fields: the individual data elements of the JSON object. Fields can be of primitive as well as complex type, which can be further made of simple and complex data types.

Data types include primitive types (string, int, long, float, double, null, boolean, and bytes) and complex types (record, enum, array, map, union, and fixed). There are also logical types, which are Avro primitive or complex types with extra attributes to represent a derived type.
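The four attributes and the three families of data types can be seen together in a small example. The sketch below builds a hypothetical `Order` schema as a Python dictionary (all names and the namespace are invented for illustration) and classifies each field's type with a helper that is likewise hypothetical.

```python
import json

PRIMITIVES = {"null", "boolean", "int", "long", "float", "double", "bytes", "string"}
COMPLEX = {"record", "enum", "array", "map", "fixed"}

ORDER_SCHEMA = {
    "type": "record",                      # Type
    "name": "Order",                       # Name
    "namespace": "com.example.sales",      # Namespace
    "fields": [                            # Fields
        {"name": "id", "type": "string"},
        {"name": "quantity", "type": "int"},
        {"name": "tags", "type": {"type": "array", "items": "string"}},
        {"name": "status", "type": {"type": "enum", "name": "Status",
                                    "symbols": ["OPEN", "SHIPPED"]}},
        {"name": "note", "type": ["null", "string"], "default": None},
        {"name": "created_at", "type": {"type": "long",
                                        "logicalType": "timestamp-millis"}},
    ],
}


def kind(avro_type) -> str:
    """Classify a type declaration as primitive, complex, or logical."""
    if isinstance(avro_type, list):        # a JSON array declares a union
        return "complex"
    if isinstance(avro_type, dict):
        if "logicalType" in avro_type:
            return "logical"
        return kind(avro_type["type"])
    if avro_type in PRIMITIVES:
        return "primitive"
    if avro_type in COMPLEX:
        return "complex"
    return "named reference"               # refers to a previously defined type


if __name__ == "__main__":
    print(json.dumps(ORDER_SCHEMA, indent=2))
    for field in ORDER_SCHEMA["fields"]:
        print(field["name"], "->", kind(field["type"]))
```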

Hierarchical view of nested objects

Complex types are easily represented in nested structures. This can be supplemented with detailed descriptions and a log of team comments gathered as the model adapts over time for the schema evolution.

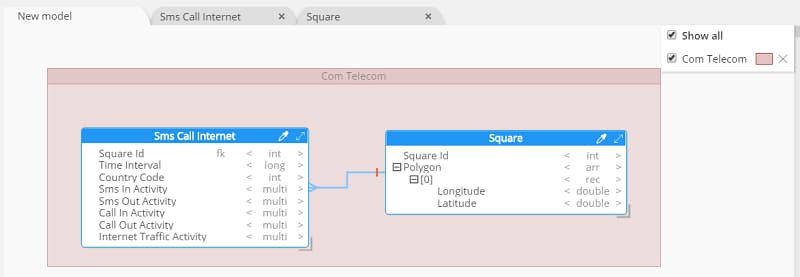

Entity Relationship Diagram (ERD)

Hackolade lets users visualize an Avro schema for different but related records via an Entity-Relationship Diagram of the physical data model.

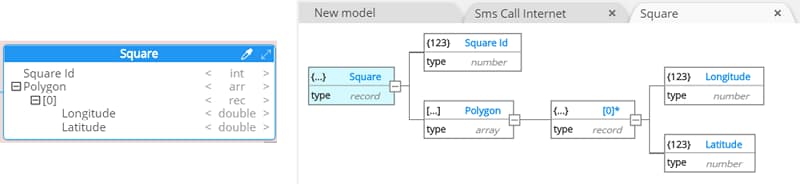

Polymorphism

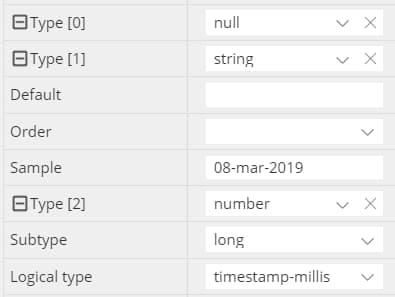

Polymorphism in the context of Avro is just a fancy word which means that a field can be defined with multiple data types. This can also be referred to as a union type.

An example of polymorphism found in data could be a date defined in two ways: a long number with timestamp-millis logical type (which represents the number of milliseconds since January 1, 1970, 00:00:00 GMT) or a string (dd-mmm-yyyy format). And since Avro does not provide the possibility to mark a field as optional, the simplest way would be to allow the field to also be a null type.

This would be represented in the following manner in JSON for Avro schema:

    {
      "name": "date_of_creation",
      "type": [
        "null",
        "string",
        {
          "type": "long",
          "logicalType": "timestamp-millis"
        }
      ]
    }

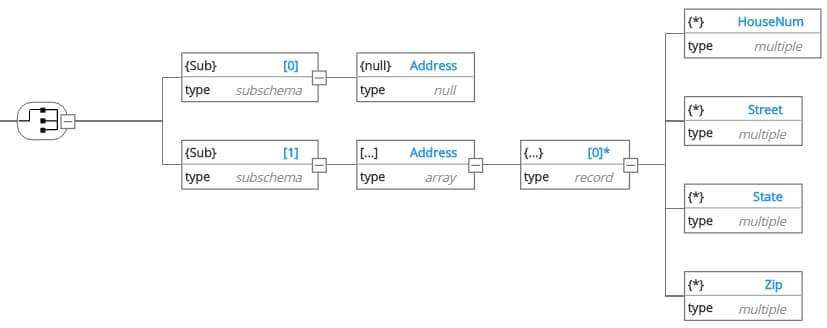
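When data for such a union is written in Avro's JSON encoding, a null is written as plain `null`, while any other value is wrapped in an object keyed by the name of the branch type. The sketch below applies that rule to the `date_of_creation` field; the function name is hypothetical, and the timestamp branch assumes a timezone-aware `datetime`.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def encode_date_of_creation(value):
    """Return the Avro JSON encoding for the ["null", "string", long] union."""
    if value is None:
        return None                                   # null branch
    if isinstance(value, str):
        return {"string": value}                      # e.g. "01-Jan-2020"
    if isinstance(value, datetime):
        # timestamp-millis is stored as a long: milliseconds since the epoch.
        millis = (value - EPOCH) // timedelta(milliseconds=1)
        return {"long": millis}
    raise TypeError(f"no union branch accepts {type(value).__name__}")


if __name__ == "__main__":
    print(encode_date_of_creation(None))
    print(encode_date_of_creation("01-Jan-2020"))
    print(encode_date_of_creation(datetime(2020, 1, 1, tzinfo=timezone.utc)))
```

Note that the logical type does not change the branch name: the timestamp is still tagged as `long`.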

Things get more complex when the combination of data types includes a complex type. But thanks to JSON Schema notation, we can still represent a structure such as an optional address object of complex type.
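For reference, an optional complex type is commonly declared in Avro itself as a union of `null` and a record. The sketch below is one possible shape, with a hypothetical `Address` record and invented field names; `null` is listed first so that the `null` default matches the first branch of the union.

```python
import json

ADDRESS_FIELD = {
    "name": "address",
    "type": [
        "null",
        {
            "type": "record",
            "name": "Address",
            "fields": [
                {"name": "street", "type": "string"},
                {"name": "city", "type": "string"},
                {"name": "postal_code", "type": ["null", "string"], "default": None},
            ],
        },
    ],
    "default": None,
}


def is_optional(field: dict) -> bool:
    """A field is treated as optional when its type is a union containing null."""
    return isinstance(field["type"], list) and "null" in field["type"]


if __name__ == "__main__":
    print(json.dumps(ADDRESS_FIELD, indent=2))
    print(is_optional(ADDRESS_FIELD))
```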

Outputs of a Visual Schema Editor for Avro

In addition to the dynamic Avro schema creation which facilitates development, Hackolade provides a rich, human-readable HTML report, including diagrams, records, fields, relationships and all their metadata. Many additional features have been developed to help data modelers.

Benefits of Avro Schema Design

A model-first approach advocates designing the contract between producers and consumers prior to writing any application code: it is effective, it helps consumers understand data structures quickly, and it reduces the time to integrate. A consistent design shortens the learning curve and promotes reuse and understanding of data structures across complex data-centric enterprises. Hackolade increases data agility by making data structures transparent and facilitating their evolution. The benefits of schema design for Avro are widespread and measurable.

Model-first schema design is a best practice to ensure that applications evolve, scale, and perform well. A good data model helps reduce development time, increase application quality, and lower execution risks across the enterprise.

Anticipate future schema evolution

The structure of your data will change over time. Effective data modeling helps ensure compatibility and smooth transition between different versions of the schema. By carefully designing the schema, you can plan for backward and forward compatibility, enabling seamless evolution of your data in a Kafka environment.

Facilitate message validation

Schemas can define the structure, data types, and constraints of the data. By designing a schema that accurately represents your data, you can facilitate schema validation during message production or consumption, ensuring that the messages being produced or consumed adhere to the expected structure and prevent data inconsistencies and errors.
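To show what validating a message against a schema involves, here is a minimal sketch of a conformance check covering a subset of Avro types (primitives, enum, array, map, union, and record). The function and the `EVENT_SCHEMA` example are hypothetical; named-type references, fixed, and logical types are left out, and production code would normally delegate this to an Avro library.

```python
def conforms(schema, datum) -> bool:
    """Return True when `datum` matches `schema` (subset of Avro types)."""
    if isinstance(schema, list):                     # union: any branch may match
        return any(conforms(branch, datum) for branch in schema)
    if isinstance(schema, str):
        schema = {"type": schema}
    kind = schema["type"]
    if kind == "null":
        return datum is None
    if kind == "boolean":
        return isinstance(datum, bool)
    if kind in ("int", "long"):
        bits = 31 if kind == "int" else 63
        return (isinstance(datum, int) and not isinstance(datum, bool)
                and -(1 << bits) <= datum < (1 << bits))
    if kind in ("float", "double"):
        return isinstance(datum, (int, float)) and not isinstance(datum, bool)
    if kind == "string":
        return isinstance(datum, str)
    if kind == "bytes":
        return isinstance(datum, bytes)
    if kind == "enum":
        return datum in schema["symbols"]
    if kind == "array":
        return isinstance(datum, list) and all(
            conforms(schema["items"], item) for item in datum)
    if kind == "map":
        return isinstance(datum, dict) and all(
            isinstance(key, str) and conforms(schema["values"], value)
            for key, value in datum.items())
    if kind == "record":
        if not isinstance(datum, dict):
            return False
        for field in schema["fields"]:
            if field["name"] in datum:
                if not conforms(field["type"], datum[field["name"]]):
                    return False
            elif "default" not in field:             # missing and no fallback
                return False
        return True
    raise ValueError(f"unsupported type: {kind!r}")


EVENT_SCHEMA = {
    "type": "record",
    "name": "Event",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "kind", "type": {"type": "enum", "name": "Kind",
                                  "symbols": ["CREATED", "DELETED"]}},
        {"name": "tags", "type": {"type": "array", "items": "string"}},
        {"name": "note", "type": ["null", "string"], "default": None},
    ],
}

if __name__ == "__main__":
    print(conforms(EVENT_SCHEMA, {"id": 1, "kind": "CREATED", "tags": ["a"]}))
    print(conforms(EVENT_SCHEMA, {"id": "1", "kind": "CREATED", "tags": []}))
```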

Optimize message payload

The structure and organization of the Avro schema directly impact the efficiency of message serialization and deserialization. Effective data modeling helps optimize the schema design to minimize message size, reduce network overhead, and improve overall performance in a Kafka cluster.
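The effect on message size can be illustrated with Avro's binary encoding, in which field names are not written at all: a record is just its field values in schema order, with integers stored as zigzag variable-length values and strings prefixed by their length. The sketch below hand-encodes a hypothetical two-field record (`id` as long, `name` as string) and compares it with the same data as JSON text.

```python
import json


def encode_long(n: int) -> bytes:
    """Avro int/long encoding: zigzag, then 7 bits per byte, low bits first."""
    n = (n << 1) ^ (n >> 63)
    out = bytearray()
    while n & ~0x7F:
        out.append((n & 0x7F) | 0x80)
        n >>= 7
    out.append(n)
    return bytes(out)


def encode_string(text: str) -> bytes:
    """Avro string encoding: byte length as a long, then the UTF-8 bytes."""
    raw = text.encode("utf-8")
    return encode_long(len(raw)) + raw


def encode_user(user: dict) -> bytes:
    """Encode a record with fields id (long) and name (string), in schema order."""
    return encode_long(user["id"]) + encode_string(user["name"])


USER = {"id": 42, "name": "Ada"}
AVRO_BYTES = encode_user(USER)
JSON_BYTES = json.dumps(USER).encode("utf-8")

if __name__ == "__main__":
    print(len(AVRO_BYTES), "bytes as Avro binary")
    print(len(JSON_BYTES), "bytes as JSON text")
```

Small numeric values take a single byte, so design choices such as field types and the number of optional unions have a direct effect on payload size.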

Enable compatibility and interoperability

Well-designed data models provide a common understanding and interpretation of the data structure, enabling seamless interoperability between different systems. This allows producers and consumers to exchange and process Avro messages reliably and consistently.

Integrate with the Confluent Schema Registry

Effective data modeling ensures that the schema design aligns with the capabilities and requirements of the registry. It enables versioning, compatibility checks, and schema evolution management, facilitating a robust and scalable schema governance process.

Define union schemas with references

Define complex data structures that involve multiple data types and reference existing schemas. Promote schema reuse and a modular approach to schema design, and maintain schema consistency across different data models and applications within your Kafka ecosystem.

Object Storage

In the context of large-scale distributed systems like data lakes, data is often stored in object storage solutions like Amazon S3, Azure Data Lake Storage (ADLS), or Google Cloud Storage. Avro can be used to serialize the data into a binary format, which is then stored in the object storage system as a file, making it easily accessible for processing and analysis.

With Hackolade Studio, you can reverse-engineer Avro files located on:

- Amazon S3

- Azure Blob Storage

- Azure Data Lake Storage (ADLS) Gen 1 and Gen 2

- Google Cloud Storage

Schema Registries

A key component of event streaming is to enable broad compatibility between applications connecting to Kafka. In large organizations, trying to ensure data compatibility informally can be difficult and ultimately ineffective, so schemas should be handled as “contracts” between producers and consumers.

The main benefit of using a Schema Registry is that it provides a centralized way to manage and version Avro schemas, which can be critical for maintaining data compatibility and ensuring data quality in a Kafka ecosystem.

Hackolade Studio supports Avro schema maintenance in schema registries: schemas can be published to a registry via forward-engineering, or reverse-engineered from it.

Free trial

To try the first visual Avro schema editor and get the full experience of Hackolade Studio free for 14 days, download the latest version of Hackolade Studio and install it on your desktop. There's no risk, no obligation, and no credit card required! The software runs on Windows, Mac, and Linux, and it also supports several leading NoSQL databases. Or you can run the Community edition in the browser.
